The all-in-one control panel for enterprise GenAI. Built to maximize infrastructure performance, minimize total cost of ownership, and give you end-to-end control from deployment and administration to compliance and security. Engineered to enable both technical and non-technical teams alike.

A performant model deployment alone is not enough to ensure the success of your GenAI initiatives. You must have;

You can stitch together 100 different tools and still come up short—or you can use Bud AI foundry and get it right from day one.

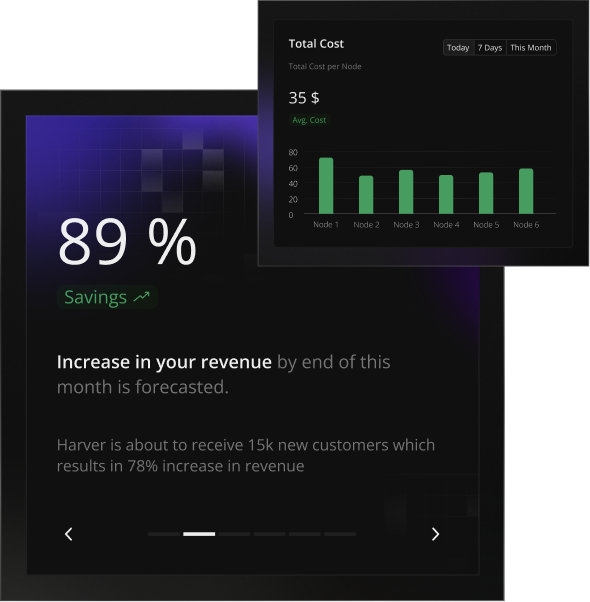

Automated cost optimisation for model deployments and cost-aware deployment scaling.

Budgeting, rate limiting, and usage limits across projects, models, users, teams, use cases, and agents.

Simulator that helps you find the most cost optimal hardware and deployment settings.

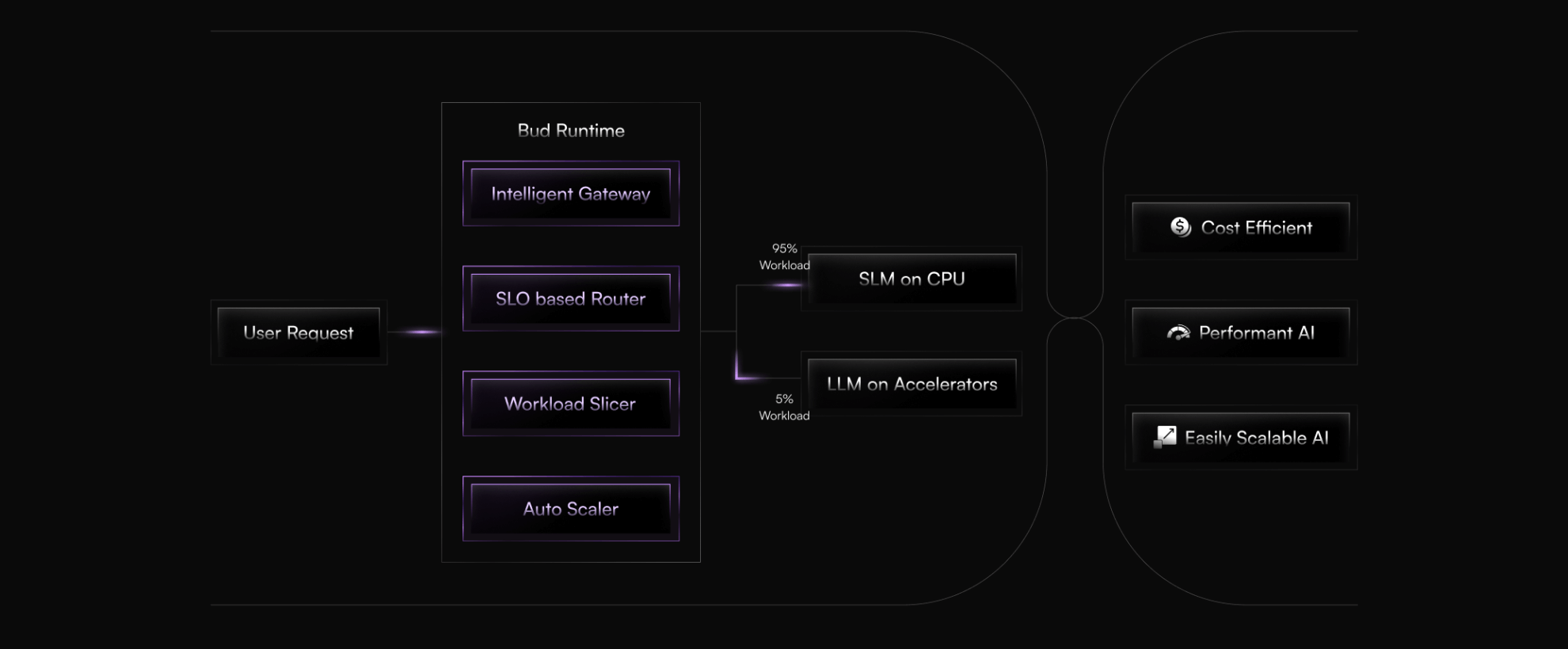

Automatically routes requests to the most cost-effective model that meets SLO requirements.

Automatically optimizes performance across models, workloads, and hardware using optimal parallelism, quantization, and execution strategies.

Delivers the fastest AI gateway in the industry, ensuring ultra-low latency of under 1 ms for real-time AI inference and interactions.

Enables shared, distributed key–value caching to reduce latency and improve throughput across concurrent GenAI workloads.

Delivers ~3X higher performance on NVIDIA GPUs and ~1.5X on other accelerators. 12X faster cold starts.

Provides multi-layered guardrails across all layers with less than 10 ms (SOTA) added latency.

Supports regex, fuzzy matching, bag-of-words, classifier models, and LLM-based scanning.

Leverage off-the-shelf probes or create custom ones using datasets or Bud's symbolic AI expressions.

Deploy guardrails on commodity CPUs with up to 100X better performance than A100 GPUs, maintaining the same level of accuracy.

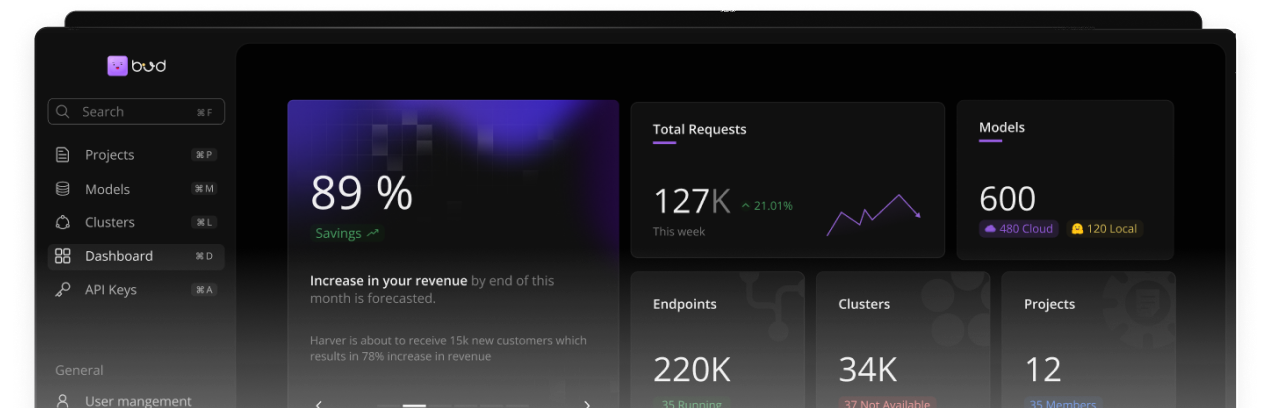

Track latency, cost, accuracy, and resource usage across all models, agents, teams projects and clusters efficiently.

Maintain complete traceability for prompts, responses, queries, model usage, model versions, and all access events securely.

Monitor AI consumption per user, team, and application to optimize resource allocation and efficiency.

Continuously measure and enforce performance and accuracy SLOs to ensure reliable AI operations.

Easily design agents and workflows without coding, enabling faster prototyping and deployment.

Start quickly with ready-to-use agent and workflow templates tailored for common use cases.

Configure complex logic, triggers, and actions to build workflows precisely suited to your needs.

Design end-to-end agent workflows with prompts, tools, guardrails, and memory, connect 1000+ tools and MCPs with built-in orchestration.

Easily benchmark models for accuracy, latency, and cost before production rollout. Includes 300+ built-in benchmarks.

Run controlled experiments across models, prompts, and agents to identify optimal performance and strategies.

Safely test workloads using synthetic traffic and datasets, reducing risk before live deployment.

Automatically re-evaluate models and agents as data, usage patterns, and requirements evolve over time.

Assign view or management permissions to admins, users, consumers, and developers across modules, projects, APIs, and individual tasks.

Seamlessly integrates with Okta, Azure AD, and Google Workspace using OpenID Connect, OAuth 2.0, or SAML 2.0.

Supports LDAP or Microsoft Active Directory to keep users, groups, and attributes up-to-date automatically.

Connects with enterprise identity providers so users authenticate once across all modules.

Ensures full compliance with industry standards, including White House and EU AI guidelines, GDPR, SOC2, for secure and trustworthy AI operations.

Bud's security framework that ensures zero-trust security for model downloads, deployments, and inference operations.

Granular role-based permissions and policy enforcement protect sensitive data and workflows.

Continuous logging, monitoring, and alerting provide full traceability for security and compliance.

Runs on GPU, CPU, HPU, TPU, NPU, accelerators across different vendors and deploys across 12+ clouds, private data centers, or edge.

Comes with Bud models, supports open-source or proprietary models and offers OpenAI-like APIs and SDKs for frictionless migration.

Automatic & Heterogeneous SLO-aware scaling that instantly scales models, agents, and tools across different hardware without manual tuning.

Schedules workloads across clusters, multi-region deployments, and ensures failover with disaster recovery.

Custom SLMs, LLMs, Vision models, Code models, Audio models, embedding models tailored for specific industries and use cases.

Ready-to-use agents for common workflows, including RAG applications and typical business tasks.

Built-in Bud models that support text, images, audio, and other modalities for versatile AI interactions.

Efficient models and agents designed for fast, accurate, and scalable deployments.

OpenAI-like platform for creating, sharing, and consuming agents and prompts effortlessly.

Centralized dashboards to create custom projects, monitor usage, and track model performance.

Intuitive chat interface for testing models, performing qualitative analysis, and exploring AI capabilities.

Consumer-friendly chat interface providing seamless access to AI agents and tools.

Instantly deploy models and agents without any manual infrastructure setup.

Automatically handle quantization, kernel selection, and performance tuning for maximum efficiency based on model, workload and hardware.

Scale to zero and burst on demand with fast cold starts.

Seamlessly move from experimentation to production with a single click. Built in Deployment templates for optimal performance.

Monitor real-time AI spending across models, agents, teams, and use cases for better budgeting.

Define limits, receive alerts, and enforce policies to prevent unexpected cost overruns effectively.

Automatically scale resources based on cost-performance trade-offs, ensuring efficient AI operations without overspending.

Achieve up to 6X better total cost of ownership, validated by enterprise benchmarks and case studies.

Our proprietary FCSP method partitions GPU resources efficiently across multiple models and agents for maximum utilization.

Mix and match CPUs, GPUs, and HPUs to deploy models efficiently using the hardware you already have.

Built on Dapr with production-ready, scalable agent features, supporting protocols like A2A and ACP.

Built-in support for MCP with discovery, orchestration, and virtual MCP servers, including 400+ prebuilt MCPs.

In a GenAI implementation at Infosys, replacing cloud-based LLMs with self-hosted, open-source SLMs—deployed through Bud AI Foundry and running on CPUs—resulted in over a 90% cost reduction while still meeting the required SLOs.

Requirements change dramatically as teams move from experimentation to pilot, production, and scale. BudAI Foundry is built to meet you at each stage so you never have to rebuild or switch stacks.

With Bud Runtime's heterogeneous hardware parallelism, you can seamlessly mix and match available hardware to optimize your deployments. Yes, GPU scarcity will no longer be a bottleneck.

Using open-source models can pose a business risk, as they may contain hidden malware. Bud SENTRY—Secure Evaluation and Runtime Trust for Your Models—is a zero-trust model ingestion framework that ensures no untrusted models enter your production pipeline without rigorous checks.

Our Deployment Simulator helps you identify the most cost-effective and high-performance configuration for your GenAI deployment. By simulating different models, hardware, and use cases, you can accurately estimate ROI and make data-driven decisions—without the need for any initial investment.

Deploy to any environment with zero configuration changes for true portability.