We have open-sourced SIMD-Bench, a framework that benchmarks and profiles SIMD kernels so that their performance can be evaluated and compared across instruction set architectures (ISAs), CPU platforms, and CPU vendors. Unlike existing profilers that focus on single platforms or shallow metrics, SIMD-Bench combines reproducible cross-architecture benchmarking, roofline and top-down microarchitectural analysis, correctness validation, and energy metrics to deliver actionable, low-level insights that help developers achieve reliable, portable performance gains.

Why we built SIMD-Bench

In most GenAI model inference systems, CPU overhead is a major bottleneck. It often prevents full GPU utilization, which in turn increases latency and cost. To address this, we typically use SIMD kernels to parallelize and speed up CPU-based tasks such as tokenization, post-processing, and serialization.

However, the major challenge in SIMD kernel development is ensuring that the kernels we write are portable across different ISAs and CPU vendors. Frameworks like Google Highway mitigate this to some extent, but profiling and testing these kernels turned out to be an even bigger challenge, because we could not find a portable, cross-platform profiler. So we built one: SIMD-Bench.

SIMD-Bench also includes a rule-based kernel analysis tool and insights provider, which makes bottlenecks easier to identify and offers actionable recommendations for common performance issues. As a result, even intermediate SIMD developers can see how a kernel’s performance might be improved after profiling it.

👉 GitHub: SIMD-Bench

What is SIMD?

SIMD (Single Instruction, Multiple Data) is a parallel computing architecture where a single instruction operates simultaneously on multiple data points. Unlike scalar processing, which handles one data element at a time, SIMD processes vectors of data in parallel, making it highly efficient for tasks with repetitive computations. It accelerates workloads like image processing, scientific simulations, and linear algebra by reducing instruction overhead and improving data throughput. Modern CPUs implement SIMD through vector registers and instructions (e.g., AVX, NEON).

In Generative AI inference, SIMD can significantly reduce CPU overhead by parallelizing tasks like tokenization, post-processing, and serialization. This allows GPUs to operate at higher utilization, resulting in faster inference, lower latency, and reduced operational costs. Tools like SIMD-Bench help optimize and analyze these kernels across different CPU architectures.

The challenges in perfecting SIMD kernels

SIMD boosts modern CPU performance by allowing a single instruction to process multiple data elements in parallel, greatly improving computational throughput and efficiency. However, subtle differences in microarchitecture, memory behavior, and instruction choices can turn a seemingly optimal SIMD kernel into a performance mystery, one that behaves differently across CPUs and ISAs. SIMD engineers often end up with questions like:

- Why is my SIMD kernel slower than expected?

- Why did this optimization help on one CPU but hurt on another?

- Why does this kernel regress on ARM?

- Is my kernel compute-bound or memory-bound?

- Should I use AVX-512 or AVX2?

These questions are just the tip of the iceberg. Without detailed insight, developers are left guessing, making optimization slow, error-prone, and hardware-specific.

No unified way to compare kernel performance

The performance of SIMD kernels depends on many factors, such as vector width, memory alignment and layout, cache behavior, instruction mix, pipeline utilization, frequency throttling, power and thermal limits, and compiler decisions. The same kernel can behave differently on different hardware.

For example, a SIMD kernel optimized for AVX-512 on Intel may perform worse when run on other targets such as AVX2 on AMD, ARM NEON, or ARM SVE. Currently, there is no unified way to compare kernel performance across different CPUs and ISAs, or to clearly understand why performance and behavior differ.

Hard to get insights on kernel performance issues

SIMD engineers often face complex performance bottlenecks that are not obvious just from looking at code or raw metrics. For example, engineers frequently turn to performance counters to understand how well their code is running. They check metrics like L1, L2, or last-level cache (LLC) miss rates, memory bandwidth utilization, instructions per cycle (IPC), and vectorization ratios. At first glance, these numbers seem like clear indicators of performance—but they rarely tell the whole story.

Take IPC, for example. A low IPC signals inefficiency, but it doesn’t reveal the cause. Is the processor waiting on slow memory? Are there not enough independent instructions to exploit instruction-level parallelism? Or are frequent branch mispredictions causing pipeline flushes? Without digging deeper, there’s no way to know.

Similarly, a high cache miss rate hints at poor data locality, but it doesn’t specify whether reorganizing data structures, applying loop tiling, or changing access patterns would make a meaningful difference. Memory bandwidth utilization can appear normal, yet hidden latency-bound stalls might still throttle performance.

The result is a tangle of numbers and vague signals. Engineers can spend hours—or even days—trying to untangle the interactions between code behavior and hardware performance, piecing together a story that the raw counters alone cannot tell. Each metric provides a clue, but without context, they’re just fragments of a puzzle.

SIMD-Bench

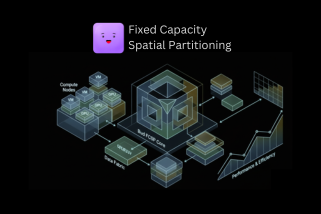

SIMD-Bench was developed to address these challenges. It is a comprehensive framework for profiling, benchmarking, and iteratively improving SIMD kernels across multiple hardware platforms. It provides a holistic view of kernel performance by integrating timing, hardware performance counters, energy profiling, and automated microarchitectural analysis.

Key Features

- Cross-Platform Support: Native support for x86 (SSE/AVX/AVX-512), ARM (NEON/SVE), and RISC-V (RVV) via Google Highway.

- Holistic Metrics: Covers performance (GFLOPS, IPC), SIMD efficiency, memory bandwidth, and cache utilization.

- Built-in Analysis Engines:

  - Roofline Model: Automated arithmetic intensity bounds analysis.

  - Top-Down Microarchitecture Analysis (TMA): Identifies bottlenecks in the pipeline (Frontend, Backend, Retiring, Bad Speculation).

  - Insights Provider: A rule-based engine that provides actionable optimization recommendations.

- Energy Profiling: Integrated RAPL/PowerAPI support for measuring power consumption and energy efficiency.

- Correctness Verification: Automated numerical accuracy checks against reference scalar implementations.

- Flexible Reporting: Generates reports in JSON, Markdown, and HTML formats.

Metrics Framework

SIMD-Bench tracks a wide array of metrics to provide a 360-degree view of kernel performance.

1. Performance Metrics

- GFLOPS/GOPS: Throughput of floating-point and integer operations.

- IPC/CPI: Instructions per cycle and cycles per instruction.

- Latency: Time to process a single element (measured via RDTSC).
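As a rough illustration of how the performance metrics above relate to raw measurements, the sketch below derives them from a handful of counters. The `RawCounters` type, its field names, and the sample values are hypothetical and are not taken from SIMD-Bench itself.

```python
from dataclasses import dataclass


@dataclass
class RawCounters:
    """Raw measurements for one kernel run (hypothetical field names)."""
    flops: int         # floating-point operations executed
    instructions: int  # retired instructions
    cycles: int        # core clock cycles
    seconds: float     # wall-clock time of the timed region
    elements: int      # data elements processed


def performance_metrics(c: RawCounters) -> dict:
    """Derive throughput, IPC/CPI, and per-element latency from raw counters."""
    return {
        "gflops": c.flops / c.seconds / 1e9,
        "ipc": c.instructions / c.cycles,
        "cpi": c.cycles / c.instructions,
        "ns_per_element": c.seconds * 1e9 / c.elements,
    }


# Made-up sample values, for illustration only.
sample = RawCounters(flops=2_000_000, instructions=1_500_000,
                     cycles=1_000_000, seconds=0.0005, elements=1_000_000)
metrics = performance_metrics(sample)
print(metrics)
```

IPC and CPI are reciprocals of each other, so a report only needs to store one of them.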

2. SIMD-Specific Metrics

- Vectorization Ratio: Percentage of packed vs. scalar instructions.

- Vector Capacity Usage: Efficiency of SIMD lane utilization.

- SIMD Efficiency: Achieved speedup vs. theoretical maximum for the target ISA.
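One plausible way to compute two of these SIMD-specific metrics is sketched below. The exact formulas SIMD-Bench uses are not stated here, so the definitions (packed share of all counted instructions, and speedup divided by lane count) are assumptions, as are the function names.

```python
def vectorization_ratio(packed_instr: int, scalar_instr: int) -> float:
    """Percentage of counted instructions that are packed (vector) instructions."""
    total = packed_instr + scalar_instr
    return 100.0 * packed_instr / total if total else 0.0


def simd_efficiency(scalar_seconds: float, simd_seconds: float,
                    vector_bits: int, element_bits: int) -> float:
    """Achieved speedup divided by the lane count (the ideal speedup)."""
    lanes = vector_bits // element_bits
    speedup = scalar_seconds / simd_seconds
    return speedup / lanes


# Example: a 256-bit kernel on 32-bit floats has 8 lanes.
# A 4x speedup over scalar therefore corresponds to 50% SIMD efficiency.
ratio = vectorization_ratio(packed_instr=900, scalar_instr=100)
efficiency = simd_efficiency(scalar_seconds=8.0, simd_seconds=2.0,
                             vector_bits=256, element_bits=32)
print(ratio, efficiency)
```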

3. Memory & Cache Metrics

- Arithmetic Intensity: FLOPs per byte transferred (crucial for Roofline analysis).

- Bandwidth Utilization: Percentage of peak memory bandwidth achieved.

- Cache Hit/Miss Rates: Detailed breakdown for L1, L2, and LLC.
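To make arithmetic intensity and bandwidth utilization concrete, here is a small worked example for a single-precision SAXPY kernel (`y = a * x + y`). The function names, the timing, and the peak bandwidth figure are illustrative assumptions, and the traffic count ignores cache effects.

```python
def saxpy_traffic(n: int, bytes_per_elem: int = 4):
    """FLOPs and bytes moved by y = a * x + y over n elements."""
    flops = 2 * n                         # one multiply and one add per element
    bytes_moved = 3 * n * bytes_per_elem  # load x, load y, store y
    return flops, bytes_moved


def arithmetic_intensity(flops: int, bytes_moved: int) -> float:
    """FLOPs per byte transferred."""
    return flops / bytes_moved


def bandwidth_utilization(bytes_moved: int, seconds: float,
                          peak_gb_per_s: float) -> float:
    """Achieved bandwidth as a percentage of the peak."""
    achieved_gb_per_s = bytes_moved / seconds / 1e9
    return 100.0 * achieved_gb_per_s / peak_gb_per_s


flops, bytes_moved = saxpy_traffic(10_000_000)
ai = arithmetic_intensity(flops, bytes_moved)  # 2 FLOPs per 12 bytes
# Assumed run time of 6 ms and assumed peak bandwidth of 40 GB/s.
util = bandwidth_utilization(bytes_moved, seconds=0.006, peak_gb_per_s=40.0)
print(ai, util)
```

With only about 0.17 FLOPs per byte, SAXPY is a typical example of a kernel whose speed is set by memory traffic rather than by arithmetic.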

4. Energy Metrics

- Package/Core/DRAM Power: Real-time power consumption via RAPL.

- Energy per Op: Efficiency metric (nJ/FLOP).

- Energy-Delay Product (EDP): Combined performance and energy metric.
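The energy metrics follow from simple arithmetic once an average power reading is available. The sketch below assumes the average power over the timed region has already been derived (for example from energy counter deltas); the function name and the sample numbers are hypothetical.

```python
def energy_metrics(avg_power_watts: float, seconds: float, flops: int) -> dict:
    """Energy, energy per operation, and energy-delay product for one run."""
    energy_joules = avg_power_watts * seconds
    return {
        "energy_j": energy_joules,
        "nj_per_flop": energy_joules * 1e9 / flops,
        "edp_js": energy_joules * seconds,  # lower is better
    }


# Made-up example: 20 W average package power for 0.5 s and 1e9 FLOPs.
report = energy_metrics(avg_power_watts=20.0, seconds=0.5, flops=1_000_000_000)
print(report)
```

Because EDP multiplies energy by run time, a variant that saves energy only by running much longer does not score well on it.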

Insights Provider

The Insights Provider is one of the most powerful features of SIMD-Bench. It uses a rule-based engine to analyze the collected metrics automatically and provide actionable optimization recommendations tailored to your hardware and kernel characteristics. It consists of several components working together:

- Rule-Based Analyzer: Over 16 specialized rules that examine different performance aspects.

- Classification System: Categorizes insights by type, severity, and confidence level.

- Hardware-Aware Thresholds: Adapts recommendations based on detected CPU capabilities.

- Cross-Kernel Analysis: Identifies patterns across multiple benchmarks.
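The general shape of a rule-based analyzer with classified insights can be sketched as follows. This is not the SIMD-Bench implementation: the rule names, thresholds, metric keys, and messages are all hypothetical and only illustrate the pattern of pairing a condition with a categorized recommendation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Insight:
    category: str      # e.g. "simd", "memory", "cache"
    severity: str      # e.g. "low", "medium", "high"
    confidence: float  # 0.0 to 1.0
    message: str


@dataclass
class Rule:
    name: str
    condition: Callable[[Dict[str, float]], bool]
    insight: Insight


# Hypothetical rules and thresholds, for illustration only.
RULES = [
    Rule("low_vectorization",
         lambda m: m["vectorization_ratio"] < 50.0,
         Insight("simd", "high", 0.8,
                 "Most instructions are scalar; check loops for vectorization blockers.")),
    Rule("bandwidth_saturated",
         lambda m: m["bandwidth_utilization"] > 80.0,
         Insight("memory", "medium", 0.7,
                 "Kernel is near peak bandwidth; reduce traffic via blocking or fusion.")),
    Rule("high_l1_miss_rate",
         lambda m: m["l1_miss_rate"] > 10.0,
         Insight("cache", "medium", 0.6,
                 "High L1 miss rate; review access patterns and data layout.")),
]


def analyze(metrics: Dict[str, float]) -> List[Insight]:
    """Return the insight of every rule whose condition matches the metrics."""
    return [rule.insight for rule in RULES if rule.condition(metrics)]


insights = analyze({"vectorization_ratio": 30.0,
                    "bandwidth_utilization": 85.0,
                    "l1_miss_rate": 2.0})
for item in insights:
    print(f"[{item.severity}] {item.category}: {item.message}")
```

Hardware-aware thresholds could be modeled by building `RULES` from the detected CPU capabilities instead of hard-coding the numbers.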

Use cases

Automating SIMD kernel generation

In GenAI workloads such as LLM inference, CPUs perform massive matrix multiplications for which high-performance SIMD kernels are critical. Manually optimizing kernels for each CPU architecture (x86 AVX2/AVX-512, ARM NEON/SVE, RISC-V RVV) is slow, error-prone, and non-portable. SIMD kernel creation can instead be automated by combining three components: a kernel variant generator, the SIMD-Bench profiler, and the insights engine.

Candidate kernels are generated with variations in vector width, loop structure, instruction choice, and data layout. The profiler measures detailed metrics (IPC, GFLOPS, cache behavior, memory bandwidth, SIMD efficiency, and energy consumption) on the target hardware. The insights engine interprets these metrics, identifies bottlenecks, and provides actionable optimization guidance. This feedback drives an iterative refinement loop that adjusts kernel structure and ISA-specific features until performance stops improving. By combining generation, profiling, and insight-driven analysis, developers can automatically produce efficient, portable SIMD kernels tailored to diverse CPU architectures, accelerating LLM workloads while reducing energy use and latency.
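The generate-profile-select loop described above can be sketched in a few lines. Everything here is hypothetical: the variant parameters are a small made-up search space, and `synthetic_profile` is a stand-in cost model used so the example runs anywhere, not a real SIMD-Bench measurement.

```python
from itertools import product


def generate_variants():
    """Enumerate candidate kernel configurations (hypothetical search space)."""
    for vector_bits, unroll in product((128, 256, 512), (1, 2, 4)):
        yield {"vector_bits": vector_bits, "unroll": unroll}


def synthetic_profile(variant: dict) -> float:
    """Stand-in cost model returning a fake run time in microseconds.

    A real workflow would build the variant and measure it on target hardware.
    """
    lanes = variant["vector_bits"] // 32
    return 1000.0 / lanes + 5.0 * abs(variant["unroll"] - 2)


def select_best(variants, profile):
    """Profile every variant and keep the fastest one."""
    best, best_time = None, float("inf")
    for variant in variants:
        elapsed = profile(variant)
        if elapsed < best_time:
            best, best_time = variant, elapsed
    return best, best_time


best, best_time = select_best(generate_variants(), synthetic_profile)
print(best, best_time)
```

An insight-driven loop would go one step further and use the reported bottleneck to decide which variants to generate next, rather than exhaustively scanning a fixed grid.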

Profile-driven Just-in-Time compilers

Profile-driven Just-in-Time (JIT) compilers generate optimized machine code at runtime, often producing SIMD kernels for high-performance workloads like ML or GenAI. Achieving peak performance is challenging because SIMD kernels behave differently across architectures, and the best vectorization, loop unrolling, and memory layout depend on runtime data and hardware behavior. SIMD-Bench can be integrated into a JIT workflow to address these challenges. When the JIT generates a candidate kernel, SIMD-Bench profiles it in real time, collecting metrics such as IPC, SIMD efficiency, cache behavior, memory bandwidth, and energy usage. Its Insights Provider analyzes these metrics to identify bottlenecks (underutilized SIMD lanes, memory-bound loops, or pipeline stalls) and provides actionable optimization recommendations. The JIT uses this feedback to iteratively refine kernels, adjusting vector widths, instruction choices, or data layouts until performance and energy efficiency stop improving. SIMD-Bench’s cross-ISA support helps keep kernels portable and hardware-aware, enabling JITs to generate high-performance SIMD code across diverse CPUs.

What is SIMD-Bench?

SIMD-Bench is an open-source, cross-architecture benchmarking and profiling framework for evaluating SIMD kernels on different ISAs (x86, ARM, RISC-V) and CPU platforms. It integrates performance metrics, microarchitectural analysis, energy profiling, and correctness checks into a unified pipeline.

What problems does SIMD-Bench solve?

SIMD-Bench addresses several challenges developers face when optimizing and comparing SIMD kernels. Kernels can perform very differently across CPU architectures and instruction sets, and traditional profilers often provide limited, single-platform insights. It’s also difficult to determine whether performance bottlenecks stem from vectorization, memory bandwidth, pipeline stalls, or energy inefficiency. SIMD-Bench solves these problems by providing reproducible measurements along with roofline analysis, top-down microarchitecture metrics, and rule-based guidance, helping developers diagnose performance issues rather than simply reporting raw numbers.

Which architectures does SIMD-Bench support?

SIMD-Bench supports a wide range of SIMD architectures, including x86 SIMD (SSE, AVX, AVX-512), ARM SIMD (NEON, SVE), and RISC-V Vector (RVV). This cross-platform support is made possible through the Google Highway abstraction layer, which provides a unified interface for writing and benchmarking SIMD kernels across different ISAs.

How do I know whether I’m compute-bound or memory-bound?

You can determine whether a workload is compute-bound or memory-bound by comparing its arithmetic intensity, measured as operations per byte loaded or stored, with the machine balance of the target CPU (peak compute throughput divided by peak memory bandwidth). Kernels whose arithmetic intensity falls below that ratio are limited by memory bandwidth; kernels above it are limited by compute. An empirical check also works: if performance improves when you increase CPU clock speed but shows little to no gain with faster memory, the workload is likely compute-bound. Hardware performance counters can provide more precise insight, especially metrics like L1/L2 cache miss rates, memory bandwidth utilization, retired instructions, and CPU cycles. As a rule of thumb, workloads that stream large arrays while performing relatively few operations tend to be memory-bandwidth bound, in which case techniques like SIMD optimization may deliver less benefit than expected.
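The roofline model turns this comparison into a short calculation: attainable throughput is the smaller of peak compute and arithmetic intensity times peak bandwidth. The sketch below is a generic illustration of that formula, not SIMD-Bench code, and the peak figures are assumed values for an imaginary machine.

```python
def roofline(arithmetic_intensity: float, peak_gflops: float,
             peak_gb_per_s: float):
    """Return (attainable GFLOPS, bound type) under the basic roofline model."""
    ridge_point = peak_gflops / peak_gb_per_s  # FLOPs/byte where the roofs meet
    attainable = min(peak_gflops, arithmetic_intensity * peak_gb_per_s)
    bound = "memory-bound" if arithmetic_intensity < ridge_point else "compute-bound"
    return attainable, bound


# Assumed machine: 100 GFLOPS peak compute, 25 GB/s peak bandwidth,
# so the ridge point sits at 4 FLOPs per byte.
streaming = roofline(0.25, peak_gflops=100.0, peak_gb_per_s=25.0)
dense = roofline(8.0, peak_gflops=100.0, peak_gb_per_s=25.0)
print(streaming)  # low-intensity streaming kernel
print(dense)      # high-intensity kernel such as a blocked matrix multiply
```

For the streaming kernel, the model caps throughput at 6.25 GFLOPS regardless of how wide the vectors are, which is why wider SIMD often does little for such loops.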

What’s the #1 mistake people make in SIMD benchmarking?

Benchmarking something other than the kernel itself. Results can become inconsistent if the data is sometimes in cache and sometimes not, or if the timing includes setup costs such as memory allocation, random number generation, or I/O instead of just the core computation. In some cases, the compiler may even optimize the actual work away if the results are unused. Additionally, dynamic frequency scaling or turbo boost changing during the run can skew measurements and lead to misleading conclusions.
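A minimal timing harness that avoids several of these pitfalls might look like the sketch below. It is a generic illustration with hypothetical names, not the SIMD-Bench harness: setup happens outside the timed region, warm-up runs bring data into cache, every result is consumed, and the median of repeated runs is reported.

```python
import statistics
import time


def bench(kernel, make_input, warmup: int = 3, repeats: int = 10) -> dict:
    """Time only the kernel call, after warm-up, over several repetitions."""
    data = make_input()  # setup cost is excluded from the timed region
    sink = 0.0
    for _ in range(warmup):
        sink += kernel(data)  # warm caches; results are still consumed
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        result = kernel(data)
        samples.append(time.perf_counter() - start)
        sink += result  # keep every result observable
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "stdev_s": statistics.stdev(samples),
        "sink": sink,
    }


def sum_of_squares(values):
    return sum(v * v for v in values)


timing = bench(sum_of_squares, lambda: [float(i) for i in range(1000)])
print(timing)
```

A large standard deviation relative to the median is a hint that frequency scaling or background noise is affecting the run.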

How do I stop the compiler from optimizing my benchmark away?

To get meaningful benchmark results, you need to ensure the compiler cannot prove that the computed results are unused. This typically means accumulating results into a volatile sink or returning a value that is consumed externally. For array-based workloads, computing a checksum is a common approach, but it should be kept lightweight so the checksum calculation itself does not dominate the runtime. Finally, avoid benchmarking loops where the compiler can constant-fold or otherwise eliminate the entire computation.

Why is my SIMD version slower than scalar?

A SIMD version can actually run slower than a scalar version for several common reasons. Often, the workload is memory-bound, and vectorizing it adds extra loads, stores, or shuffle operations. Introducing gathers or scatters can be particularly expensive. Vectorization can also increase register pressure, leading to spills to the stack. Misaligned memory accesses may incur penalties (less common on modern x86, but still possible). Additionally, SIMD can require extra instructions for packing, unpacking, permutations, or horizontal operations. Finally, a poor unroll factor can create front-end bottlenecks or put pressure on the instruction cache, further reducing performance.

Is SIMD-Bench suitable for automated workflows (CI, nightly testing)?

Yes. The JSON output and correctness checks make it well-suited to CI integration, regression detection, and automated performance dashboards.
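As an illustration of what a CI regression gate over JSON reports could look like, consider the sketch below. The report layout (a mapping from kernel name to `gflops` and `correct` fields) is a hypothetical schema chosen for this example; the actual SIMD-Bench JSON format may differ.

```python
import json


def find_regressions(baseline_json: str, current_json: str,
                     tolerance: float = 0.05) -> list:
    """Compare two reports and list kernels that failed or got slower.

    Assumes a hypothetical schema: {kernel: {"gflops": float, "correct": bool}}.
    """
    baseline = json.loads(baseline_json)
    current = json.loads(current_json)
    regressions = []
    for kernel, base in baseline.items():
        cur = current.get(kernel)
        if cur is None:
            continue  # kernel missing from the current run; skipped here
        if not cur["correct"]:
            regressions.append((kernel, "correctness check failed"))
        elif cur["gflops"] < base["gflops"] * (1.0 - tolerance):
            regressions.append((kernel, "throughput dropped"))
    return regressions


baseline = ('{"dot_f32": {"gflops": 40.0, "correct": true}, '
            '"saxpy_f32": {"gflops": 12.0, "correct": true}}')
current = ('{"dot_f32": {"gflops": 35.0, "correct": true}, '
           '"saxpy_f32": {"gflops": 12.1, "correct": true}}')
regressions = find_regressions(baseline, current)
print(regressions)
```

In a CI job, a non-empty result would typically translate into a non-zero exit code so that the pipeline fails on a regression.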
